Bayesian Network Mixture

A mixture of BNs is a list of BNs, each with its own weight. Two types of mixtures are currently available.

Both inherit from a common parent class, pyagrum.bnmixture.IMixture, and share most of their functions. The main difference is their purpose:

- BNMixture: used with known BNs or databases to compare different instances of the same model. Inference and learning work as for a single BN, but are applied to many BNs at once.

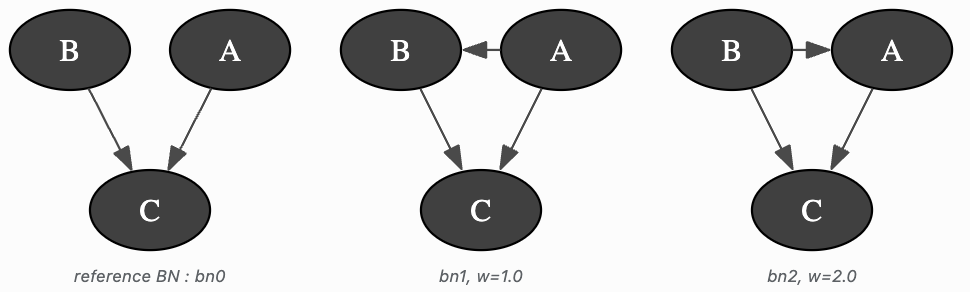

- BootstrapMixture: used with one BN or database, which becomes the reference BN. All other BNs added are bootstrap-generated (bootstrap is a resampling method used to obtain estimators when the database is limited). When learning BNs, the default weight is 1.
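To make the idea of per-BN weights concrete, here is a minimal plain-Python sketch. It does not use pyAgrum: the names `normalize_weights` and `is_normalized` and the BN labels are hypothetical, and it assumes that normalizing a mixture means rescaling the weights so that they sum to 1.

```python
def normalize_weights(weights):
    """Rescale the weights so that they sum to 1 (assumed meaning of 'normalized')."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("the weights must have a positive sum")
    return {name: w / total for name, w in weights.items()}


def is_normalized(weights, tol=1e-9):
    """Return True when the weights sum to 1 within a small tolerance."""
    return abs(sum(weights.values()) - 1.0) <= tol


# Hypothetical mixture: three BNs identified by name, each with its own weight.
weights = {"bn_a": 1.0, "bn_b": 1.0, "bn_c": 2.0}
normalized = normalize_weights(weights)
```

In this sketch, "bn_c" ends up with twice the weight of each of the other two BNs, and the relative proportions are preserved by the rescaling.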

Bootstrap sampling

Bootstrap is a statistical inference method used to obtain estimators for parameters (mean, quantile values, variance).

Idea: start from a database with n samples. From this database we can learn a Bayesian network. But is the database reliable? Bootstrap lets us find out. From the original database we create other databases, each obtained by sampling the original database with replacement until it contains n samples. Once we have all the databases, we learn a Bayesian network from each one. If the original database is reliable, there should not be many differences between the BN learned from the original samples and the BNs learned from the resampled ones. Conversely, if the original database is not good or accurate, the learned BNs can differ substantially.

One indicator of difference is the arcs of the learned BNs. For example, suppose a given arc is in the BN learned from the original database. We have high confidence in that arc if it also appears often in the other BNs. Conversely, if an arc is not in the BN learned from the original database but appears in almost all the other BNs, we are less confident that its absence was correctly learned. Bootstrap can thus give a more accurate picture of the result when the database is limited.
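The procedure described above can be sketched in plain Python. This is only an illustration under stated assumptions: it does not use pyAgrum, and `toy_learn_arcs` is a hypothetical stand-in for a real structure learning algorithm (it proposes an undirected link between two columns when they agree in most rows). Only the resampling with replacement and the arc frequency count reflect the bootstrap idea itself.

```python
import random
from collections import Counter


def bootstrap_resample(rows, rng):
    """Draw len(rows) rows from `rows`, with replacement."""
    return [rng.choice(rows) for _ in range(len(rows))]


def toy_learn_arcs(rows, threshold=0.8):
    """Hypothetical stand-in for structure learning (NOT a real BN learner).

    Proposes the pair (i, j), i < j, when columns i and j hold the same
    value in at least `threshold` of the rows.
    """
    n_cols = len(rows[0])
    arcs = set()
    for i in range(n_cols):
        for j in range(i + 1, n_cols):
            agreement = sum(r[i] == r[j] for r in rows) / len(rows)
            if agreement >= threshold:
                arcs.add((i, j))
    return arcs


def arc_confidence(rows, n_bootstrap=100, seed=0):
    """Return the reference arcs and each arc's frequency over the resamples."""
    rng = random.Random(seed)
    reference = toy_learn_arcs(rows)  # learned from the original database
    counts = Counter()
    for _ in range(n_bootstrap):
        counts.update(toy_learn_arcs(bootstrap_resample(rows, rng)))
    freqs = {arc: counts[arc] / n_bootstrap for arc in set(counts) | reference}
    return reference, freqs


# Toy database: columns 0 and 1 are always equal, column 2 agrees with
# each of them in exactly half of the rows.
rows = [(i % 2, i % 2, (i // 2) % 2) for i in range(200)]
reference, freqs = arc_confidence(rows)
```

An arc of `reference` with a frequency close to 1 in `freqs` is well supported by the resampled databases. An arc that is absent from `reference` but has a high frequency would call the original result into question.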

Tutorials

Reference

- Mixture Model

BNMixture: BNMixture.BN(), BNMixture.BNs(), BNMixture.add(), BNMixture.existsArc(), BNMixture.isNormalized(), BNMixture.isValid(), BNMixture.loadBIF(), BNMixture.names(), BNMixture.normalize(), BNMixture.remove(), BNMixture.saveBIF(), BNMixture.setWeight(), BNMixture.size(), BNMixture.updateRef(), BNMixture.variable(), BNMixture.weight(), BNMixture.weights(), BNMixture.zeroBNs()

BootstrapMixture: BootstrapMixture.BN(), BootstrapMixture.BNs(), BootstrapMixture.add(), BootstrapMixture.existsArc(), BootstrapMixture.isNormalized(), BootstrapMixture.isValid(), BootstrapMixture.loadBIF(), BootstrapMixture.names(), BootstrapMixture.normalize(), BootstrapMixture.remove(), BootstrapMixture.saveBIF(), BootstrapMixture.setWeight(), BootstrapMixture.size(), BootstrapMixture.variable(), BootstrapMixture.weight(), BootstrapMixture.weights(), BootstrapMixture.zeroBNs()

- Inference on mixtures

- Learning mixtures

BNMLearner

BNMBootstrapLearner: BNMBootstrapLearner.learnBNM(), BNMBootstrapLearner.updateState(), BNMBootstrapLearner.useBDeuPrior(), BNMBootstrapLearner.useDirichletPrior(), BNMBootstrapLearner.useGreedyHillClimbing(), BNMBootstrapLearner.useIter(), BNMBootstrapLearner.useK2(), BNMBootstrapLearner.useLocalSearchWithTabuList(), BNMBootstrapLearner.useMDLCorrection(), BNMBootstrapLearner.useMIIC(), BNMBootstrapLearner.useNMLCorrection(), BNMBootstrapLearner.useNoCorrection(), BNMBootstrapLearner.useNoPrior(), BNMBootstrapLearner.useScoreAIC(), BNMBootstrapLearner.useScoreBD(), BNMBootstrapLearner.useScoreBDeu(), BNMBootstrapLearner.useScoreBIC(), BNMBootstrapLearner.useScoreK2(), BNMBootstrapLearner.useScoreLog2Likelihood(), BNMBootstrapLearner.useSmoothingPrior()