Bayesian Beta Distributed Coin Inference

|  |  |

build a fully bayesian beta distributed coin inference

Section titled “build a fully bayesian beta distributed coin inference”This notebook is based on examples from Benjamin Datko.

The basic idea of this notebook is to show you could assess the probability for a coin, knowing a sequence of heads/tails.

import time

from pylab import *import scipy.stats

import pyagrum as gumimport pyagrum.lib.notebook as gnbgum.config["notebook", "default_graph_size"] = "12!"gum.config["notebook", "default_graph_inference_size"] = "12!"Fill Beta parameters with a re-parameterization

Section titled “Fill Beta parameters with a re-parameterization”

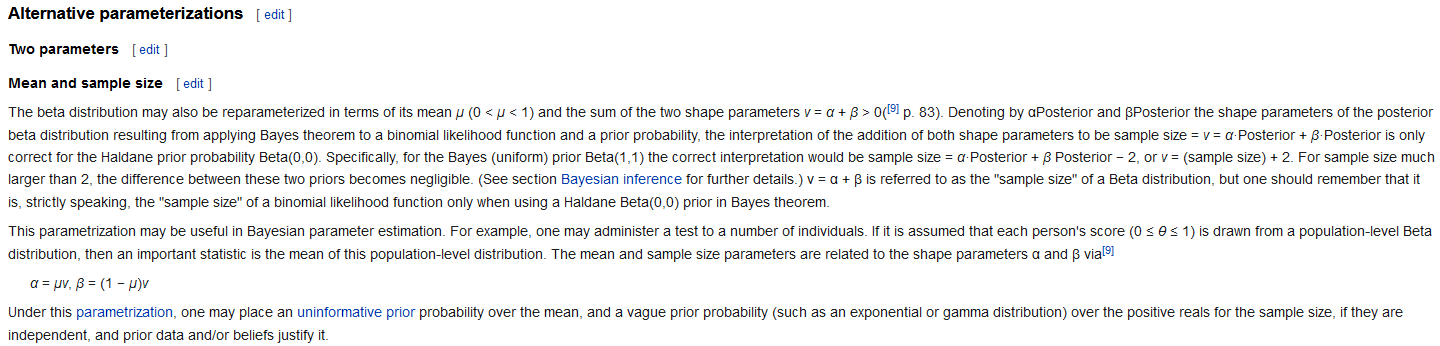

We propose a model where : mu and nu are the parameters of a beta which gives the distribution for the coins.

- below are some useful definitions

- like in Wikipedia article, we will have a uniform prior on μ and an expoential prior on ν

## the sequence of COINSserie = [1, 0, 0, 0, 1, 0, 1, 1, 0, 1, 0, 0, 1, 0, 0, 1]NB_ = 200

vmin, vmax = 0.001, 0.999pmin_mu, pmax_mu = 0.001, 0.999pmin_nu, pmax_nu = 1, 50size_ = 16bn = gum.BayesNet("SEQUENCE OF COINS MODEL")mu = bn.add(gum.NumericalDiscreteVariable("mu", "mean of the Beta distribution", pmin_mu, pmax_mu, NB_))nu = bn.add( gum.NumericalDiscreteVariable("nu", "'sample size' of the Beta where nu = a + b > 0", pmin_nu, pmax_nu, NB_))bias = bn.add(gum.NumericalDiscreteVariable("bias", "The bias of the coin", vmin, vmax, NB_))hs = [bn.add(gum.RangeVariable(f"H{i}", "The hallucinations of coin flips", 0, 1)) for i in range(size_)]

bn.addArc(mu, bias)bn.addArc(nu, bias)for h in hs: bn.addArc(bias, h)print(bn)bnBN{nodes: 19, arcs: 18, domainSize: 10^11.7196, dim: 7963598, mem: 61Mo 89Ko 128o}bn.cpt(nu).fillFromDistribution(scipy.stats.expon, loc=2, scale=5)bn.cpt(mu).fillFromDistribution(scipy.stats.uniform, loc=pmin_mu, scale=pmax_mu - pmin_mu)

gnb.flow.clear()gnb.flow.add(gnb.getProba(bn.cpt(nu)), caption="Distribution for nu")gnb.flow.add(gnb.getProba(bn.cpt(mu)), caption="Distribution for mu")gnb.flow.display()## <https://scicomp.stackexchange.com/a/10800>t_start = time.time()bn.cpt("bias").fillFromDistribution(scipy.stats.beta, a="mu*nu", b="(1-mu)*nu")end_time = time.time() - t_startprint(f"Filling {NB_}^3 parameters in {end_time:5.3f}s")Filling 200^3 parameters in 2.640sfor h in hs: bn.cpt(h).fillFromDistribution(scipy.stats.bernoulli, p="bias")Evidence without evidence

Section titled “Evidence without evidence”gnb.showInference(bn, size="17!")print(bn)BN{nodes: 19, arcs: 18, domainSize: 10^11.7196, dim: 7963598, mem: 61Mo 89Ko 128o}Evidence with the sequence

Section titled “Evidence with the sequence”coin_evidence = {f"H{i}": serie[i] for i in range(len(serie))}

gnb.showInference(bn, evs=coin_evidence)ie = gum.LazyPropagation(bn)ie.setEvidence(coin_evidence)ie.makeInference()from scipy.ndimage import center_of_mass

idx = ie.posterior("bias").argmax()[0][0]["bias"]map_bias = bn["bias"].label(idx)

com = center_of_mass(ie.posterior("nu").toarray())[0]

idx = ie.posterior("mu").argmax()[0][0]["mu"]map_mu = bn["mu"].label(idx)

print(f"MAP for mu : {map_mu}")print(f"center of mass for nu : {com}")print(f"MAP for bias : {map_bias}")MAP for mu : 0.4473center of mass for nu : 26.67889867211198MAP for bias : 0.4373Smaller serie

Section titled “Smaller serie”## With a smaller serieserie = [ 1, 0, 0, 0, 0, 0, 1,]

bn = gum.BayesNet("SEQUENCE OF COINS MODEL")mu = bn.add(gum.NumericalDiscreteVariable("mu", "mean of the Beta distribution", pmin_mu, pmax_mu, NB_))nu = bn.add( gum.NumericalDiscreteVariable("nu", "'sample size' of the Beta where nu = a + b > 0", pmin_nu, pmax_nu, NB_))bias = bn.add(gum.NumericalDiscreteVariable("bias", "The bias of the coin", vmin, vmax, NB_))hs = [bn.add(gum.RangeVariable(f"H{i}", "The hallucinations of coin flips", 0, 1)) for i in range(len(serie))]

bn.addArc(mu, bias)bn.addArc(nu, bias)for h in hs: bn.addArc(bias, h)

bn.cpt(nu).fillFromDistribution(scipy.stats.expon, loc=2, scale=5)bn.cpt(mu).fillFromDistribution(scipy.stats.uniform, loc=pmin_mu, scale=pmax_mu - pmin_mu)

bn.cpt("bias").fillFromDistribution(scipy.stats.beta, a="mu*nu", b="(1-mu)*nu")

for h in hs: bn.cpt(h).fillFromDistribution(scipy.stats.bernoulli, p="bias")

coin_evidence = {f"H{i}": serie[i] for i in range(len(serie))}

gnb.showInference(bn, evs=coin_evidence)